Contract Bid and Technology Proposal Evaluation in Project Management

Abstract

This white paper takes a management perspective on the different project proposal evaluation methodologies used in procurement processes. Our goal is to explain in detail how proposal evaluations take place in procurement management, drawing on both the literature and industrial experience. We list the building blocks of the process and the types of models and methodologies, then evaluate and unify the different processes and models. We also highlight insights on what you can do to win contracts. The references are mostly from the Information Technology industry and academic literature.

In-depth technical and environmental evaluation attributes are only highlighted in this paper; their details are not addressed.

Background

What is a technology proposal evaluation? The topic is too broad for a single paper to describe all its varieties. In short, I would like to highlight some problem statements we see in real life.

For example,

- Which consulting company should we choose to set up our system solutions?

- Which vendor products should we purchase for a particular purpose, and why?

- Which is the better software and application architecture, A or B?

- Which is the better solution architecture, and why?

- Which combination of OS, hardware, DBMS and server farm is better for hosting this mission-critical application?

- Is it still a good architecture/technology after disasters/pitfalls have happened? (Out of scope of this paper)

Although many technical details are involved in making such decisions, we focus on what managers should do to make them. Managers base such decisions heavily on technical advice from architects and independent consultants. The technical attributes an architect should consider will be discussed in another paper.

How do bidding and proposal evaluation fit in the procurement process?

Above is the procurement process from the Project Management Body of Knowledge (PMBOK), 4th edition [1]. Proposal evaluation sits somewhere between the Plan Procurements and Conduct Procurements processes.

What are the Building Blocks?

1) Input and Output:

- Input -> We would like to buy something or to do something

- Output -> We choose (or buy from) vendor A/company A to do this project.

2) Involving Parties

Stakeholders are the people related to the project [2]. I categorize how the decision is made according to three forces. The following is the “Three Parties Model”.

- The people who pay the money (financial and political perspective)

- The people who use the technology (usage and business perspective)

- The people who build and manage the technology (technology development, project and operation management)

- Independent evaluation team (neutral and external, not counted in the three parties)

The people who pay the money have the biggest power. Cost involves the cost of building the solution, and also the cost of managing the solution in the long term. The Sponsors won’t pay the money unless they have good inputs from the other parties.

The ultimate driver of the technology is the business user. However, feedback from users is benefit-driven, not cost-driven. Their requirements may end up costing the Sponsors a lot of money, and costing developers and administrators a lot of time and effort.

3) Types of Evaluation Tools and Methods

- Questionnaires, prototypes, simulation, analytical methods, data collection, data analysis, hypotheses, force field analysis, hierarchical decision processes, weighted average metrics, and iterative feedback

4) Principles

Cost and benefits (ROI) [2]

- Cost – how much I need to pay

- Benefits: make money, save money, look good, feel good
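As a hedged illustration of the cost and benefit principle, return on investment is commonly expressed as below. The symbols $B$ (total quantified benefits) and $C$ (total cost, covering both building the solution and managing it in the long term) are introduced here for illustration only and do not come from the cited references.

```latex
\[
\mathrm{ROI} = \frac{B - C}{C}
\]
```

For example, with hypothetical figures $B = 150$ and $C = 100$ in the same currency unit, $\mathrm{ROI} = (150 - 100)/100 = 0.5$, i.e. 50%. Benefits such as "look good" and "feel good" would need an agreed proxy value before they could enter $B$.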

5) Simplest high-level steps

- Develop criteria and define requirements

- Request for proposals (open for bids)

- Evaluation and selection

- Resulting contract clauses, terms and conditions

6) Bidder Instructions and Guidelines

- Bidder Instructions and Information

- Proposal requirements

- Evaluation and selection criteria.

- Additional requirements

- Resulting contract clauses

- Appendix

Bidder Instructions and Guidelines

(Details from Federal Government Agencies)

| Bidder Instructions and Information |
| Proposal requirements |
| Evaluation and selection criteria |
| Additional requirements |
| Resulting contract clauses |
| Appendix |

Request for Proposal

A request for proposal (referred to as RFP) is an invitation for suppliers, often through a bidding process, to submit a proposal on a specific commodity or service.

Principles, Request for Proposal

- Understand request

- Financial proposals

- Technical proposals

- Article-by-article response (compliant, non-compliant, not applicable)

- Terms and conditions proposals

- Proven financial and technical track record
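The article-by-article response listed above can be kept as a simple compliance matrix. The following is a minimal sketch, assuming hypothetical article numbers and a hypothetical `summarize` helper; it only tallies the three statuses named in the list and flags the articles a bidder would need to address.

```python
from collections import Counter

# Hypothetical RFP article numbers mapped to the bidder's stated status.
responses = {
    "3.1": "compliant",
    "3.2": "compliant",
    "3.3": "non-compliant",
    "3.4": "not applicable",
}

# The three statuses named in the text.
ALLOWED = {"compliant", "non-compliant", "not applicable"}


def summarize(responses):
    """Count responses per status and list the non-compliant articles."""
    unknown = [a for a, s in responses.items() if s not in ALLOWED]
    if unknown:
        raise ValueError(f"unrecognized status for articles: {unknown}")
    counts = Counter(responses.values())
    gaps = sorted(a for a, s in responses.items() if s == "non-compliant")
    return counts, gaps


counts, gaps = summarize(responses)
print(dict(counts))
print("Non-compliant articles:", gaps)
```

A buyer could use the same tally to screen out proposals with gaps on mandatory articles before any rated evaluation begins.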

RFP Examples

Request for Proposal Example 1 (Another Canadian University)

- Purpose of the RFP

- Company background (some statistical information about users)

- Goal of having a solution at the organization (vision)

- Key objectives (disclosed evaluation criteria): a set of high-level characteristics, such as improving administration, improving security, minimizing complexity, increasing efficiency, improving overall management, improving compliance, leveraging standards, positioning for the future, and enabling integration.

- Scope in relation to external components at other enterprises

- Acceptance test (proof of concept). If the contract is awarded: insurance, including employee liability insurance

- Functional requirements/specification

Request for Proposal Example 2 (Canadian University)

- Company profile.

- Understanding client objectives. (Vision)

- How the company meets the client objectives (security best practices, approach and methodology, and proven track record)

- Offer of a proprietary methodology, a successful IDM solution and a team of highly skilled professionals.

- Approach to project management

Request for Proposal Example 3 (US State Library)

- Demonstration of understanding of the scope and purposes of the project; the vendor’s strategy to meet each requirement; the functionality that exists in the vendor’s system; the vendor’s strategy for maintaining and supporting the system; and a description of the resources currently available and to be dedicated to ongoing support for this software

- Link to demonstrate both compliant search target

- Demonstration of the system (bidder conference)

Different evaluation methodologies and comparison

Simplistic Industrial Models

Canadian Federal Government Agency (Evaluation and selection methodology)

- Phase 1: Terms and conditions, financial and technical proposals, and evaluation of mandatory requirements.

- Phase 2: Evaluation against the rated requirements.

- Phase 3: Financial Evaluation

- Phase 4: Ranking of bids – bids are ranked by the highest combined rating of technical merit and price, where technical merit is 25% and price is 75% of total points. Lowest technical compliance proposal will be chosen.

- Phase 5: Proof of proposal – test phase (10 working days). The evaluation documents the results and determines whether the proposed solution meets the mandatory requirements of the RFP.

- Phase 6: Contractor selection – a bidder that has met all mandatory requirements, achieved the overall pass mark on the rated criteria, has the best overall combination of price and technical merit, and has passed the proof-of-concept (POC) test is granted the award.
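The screening and ranking phases above can be sketched in a few lines. This is a minimal illustration, not the agency's actual procedure: the 25%/75% weights come from the text, while the pass mark, the price-scoring rule (lowest price earns full points, others proportionally fewer), the `Bid` class and all figures are assumptions made for the example.

```python
from dataclasses import dataclass

TECH_WEIGHT = 0.25   # from the text: technical merit is 25% of total points
PRICE_WEIGHT = 0.75  # from the text: price is 75% of total points
PASS_MARK = 60.0     # hypothetical pass mark for the rated criteria (0-100)


@dataclass
class Bid:
    name: str
    meets_mandatory: bool   # Phase 1 result
    technical_score: float  # Phase 2 rated score, 0-100
    price: float            # Phase 3 evaluated price, assumed positive


def rank_bids(bids):
    """Return (name, combined score) pairs, best first, for qualifying bids."""
    # Phases 1-2: drop bids that fail mandatory requirements or the pass mark.
    qualified = [b for b in bids
                 if b.meets_mandatory and b.technical_score >= PASS_MARK]
    if not qualified:
        return []
    lowest = min(b.price for b in qualified)
    ranked = []
    for b in qualified:
        # Assumed price scoring: the lowest price earns 100 points,
        # higher prices earn proportionally fewer.
        price_score = 100.0 * lowest / b.price
        combined = TECH_WEIGHT * b.technical_score + PRICE_WEIGHT * price_score
        ranked.append((b.name, round(combined, 2)))
    # Phase 4: highest combined rating first.
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


bids = [
    Bid("A", True, 90.0, 120_000.0),
    Bid("B", True, 70.0, 100_000.0),
    Bid("C", False, 95.0, 90_000.0),  # fails a mandatory requirement
    Bid("D", True, 50.0, 80_000.0),   # below the assumed pass mark
]
ranking = rank_bids(bids)
print(ranking)
```

With these hypothetical figures, bid B ranks ahead of bid A even though A has the higher technical score, which shows how strongly a 75% price weight shapes the outcome.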

Canadian University (Evaluation and selection methodology)

- Initial selection: Vendors should summarize how each component or relevant service in the product family addresses the requirements outline.

- Selected few submissions: technical Q&A session -> final checklists -> preferred vendor selection using weighted metrics: features and performance (25%), reputation and viability (25%), total cost of ownership (50%).
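The weighted metrics in the final checklist amount to a weighted average. As a sketch, assume each vendor receives a score on a common 0 to 100 scale for features and performance ($F$), reputation and viability ($R$) and total cost of ownership ($T$, where a higher score means a lower cost). The scale and the symbols are assumptions; only the weights come from the text.

```latex
\[
S = 0.25\,F + 0.25\,R + 0.50\,T
\]
```

For a hypothetical vendor with $F = 80$, $R = 60$ and $T = 70$, this gives $S = 20 + 15 + 35 = 70$. Because half of the total rests on $T$, a ten-point gain in the cost score moves $S$ twice as far as the same gain in features or reputation.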

Complex Theoretical Models and processes

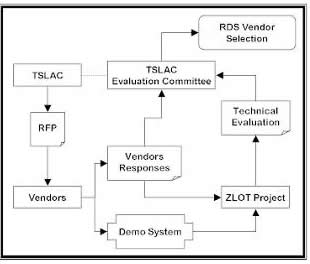

US State Library: “Technical evaluation methodology for RFP demonstration systems and RFP written responses”, University of North Texas [3]

Legal software: extension of the ISO 14598 evaluation process with a novel evaluation framework – “A process for evaluating legal knowledge-based systems based upon the Context Criteria Contingency-guidelines Framework” [4] (complex)

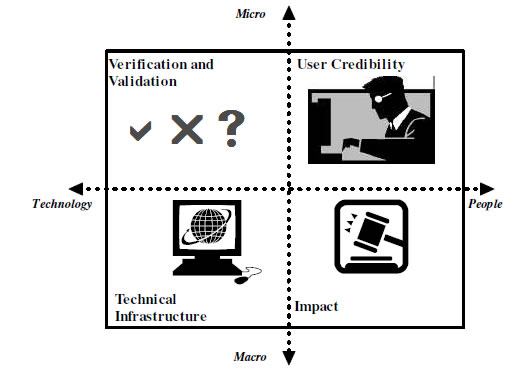

- Four quadrant model

- Verification criteria: canvass aspects of technology ‘in the small’, i.e. at the program rather than the infrastructure level.

- Credibility criteria: concern the credibility and acceptability of a system to people ‘in the small’, i.e. at the individual rather than the organizational level.

- Infrastructure criteria: concern aspects of technology ‘in the large’, or technical issues beyond the immediate program or system level.

- Impact criteria: mainly associated with people ‘in the large’, i.e. beyond the considerations of an individual, although criteria associated with personal impacts have been included here for convenience. These criteria canvass the impact of the system upon its environment, including tasks, people, the parent organization and beyond. Personal impact criteria include effects on job satisfaction, personal productivity, motivation and morale, health, welfare and safety (including stress exposure), and impacts upon privacy and personal ethics. Changes in working conditions, participation and involvement, autonomy and social interaction can also be measured. Social factors, ethics and integrity can also be canvassed.

- A ‘contingency’ is the possibility that something may occur in the future. A ‘contingency-guideline’ is a suggestion on how to deal with it.

Educational Applications of State Organization: Collaborative process for evaluating new educational technologies [5]

- Emphasizes a pilot with incremental and iterative feedback

- There is a lot of time between the pilot and the decision making

- Better and more thorough evaluation, but it takes more time at the beginning

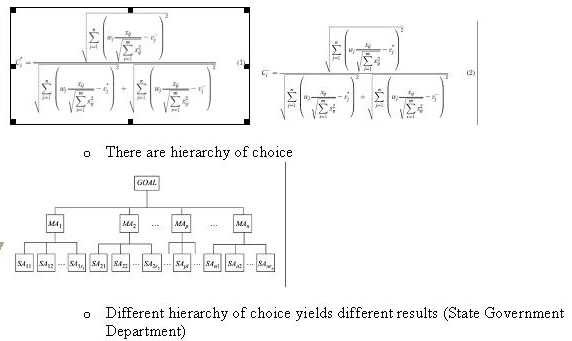

Collaborative Hierarchical fuzzy TOPSIS Model for selection among logistics information technologies [6]

- Introduces fuzziness and multi-facet-ness

- Views the problem with m alternatives as a geometric system with m points in the n-dimensional space

- Defines an index called similarity (or relative closeness) to the positive-ideal solution and the remoteness from the negative-ideal solution.
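To make the geometric idea concrete, here is a minimal sketch of classic (crisp) TOPSIS. It is a simplification, not the hierarchical fuzzy variant of [6], which works with fuzzy numbers and a hierarchy of criteria. The function name `topsis`, the criteria, the weights and the scores are all hypothetical.

```python
import math


def topsis(matrix, weights, is_benefit):
    """Classic (crisp) TOPSIS: return the relative closeness per alternative.

    matrix[i][j] is the score of alternative i on criterion j.
    is_benefit[j] is True when larger is better, False for cost criteria.
    Assumes every criterion column has at least one non-zero value.
    """
    n = len(weights)
    # Vector-normalize each criterion column, then apply the weights.
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) for j in range(n)]
    v = [[weights[j] * row[j] / norms[j] for j in range(n)] for row in matrix]
    # Positive-ideal and negative-ideal points in the n-dimensional space.
    cols = list(zip(*v))
    best = [max(c) if is_benefit[j] else min(c) for j, c in enumerate(cols)]
    worst = [min(c) if is_benefit[j] else max(c) for j, c in enumerate(cols)]
    closeness = []
    for row in v:
        d_plus = math.dist(row, best)    # distance to the positive ideal
        d_minus = math.dist(row, worst)  # distance to the negative ideal
        total = d_plus + d_minus
        # If all alternatives are identical, both distances are zero.
        closeness.append(d_minus / total if total else 0.0)
    return closeness


# Hypothetical example: three products scored on functionality (benefit),
# vendor support (benefit) and cost (a cost criterion).
scores = [
    [7.0, 9.0, 9.0],
    [8.0, 7.0, 8.0],
    [9.0, 6.0, 7.0],
]
result = topsis(scores, weights=[0.4, 0.2, 0.4],
                is_benefit=[True, True, False])
order = sorted(range(len(result)), key=lambda i: result[i], reverse=True)
print(result, order)
```

Each alternative is one of the m points in n-dimensional space; an alternative sitting exactly on the positive-ideal point gets a closeness of 1, and one on the negative-ideal point gets 0.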

Discussion and Tips

- When we observe the typical outcomes of technology management and investment appraisal, there is still a wide variation in how the evaluation of technology is carried out and in the results that are achieved. What has been learnt so far, however, is that as organizational goals and circumstances change, so does (or should) the appraisal process. [7]

- The process and nature of evaluating and making technology decisions requires continual experience and applied knowledge in order to succeed. I fear that there is a real risk of trying to do too much, to over-analyse, to over-evaluate; thereby intrinsically increasing complexity and widening choice and uncertainty. [7]

- Technical evaluation can be very different in different situations, for example software/application architecture, solution system selection, vendor selection, planned (before use) or unplanned (after disasters) evaluation, and bidder evaluation. Problems of a different nature may call for totally different evaluation models.

- There are at least three types of stakeholders driving the evaluation: the people who pay the money, the people who develop and manage the operations, and the people who use the systems. They have conflicting goals, although eventually they are all subject to financial and political perspectives.

- The major consideration for a bid is financial. Don’t think technical merits are that important. However, any industrial evaluation process that over-emphasizes the short-term financial benefits has a limitation: it does not give enough consideration to the long-term financial benefits, which are usually related to technical merits.

- If you want to win a contract, there is an implication that you can squeeze the cost by trading off some of the technical merits.

- In order to save time and effort in considering everything, companies request a proven track record, and the financial status of the bidding contractors. This serves as a cognitive shortcut for the buyers to avoid spending effort evaluating the proposals.

- In many industrial settings, the evaluation process is relatively short. With limited time and resource budgets, it is hard to carry out a thorough evaluation. No one has the money to cover the cost of technical evaluation. All the evaluation methods used are relatively lightweight and only consider verification, credibility and acceptability criteria. The infrastructure and impact criteria can only be fulfilled after a procurement decision has been made.

- In some federally funded, large-scale, or academic research-based projects (mostly in the US), the evaluation process can be quite long and well funded. It emphasizes building a pilot and a phase-based, incremental, iterative and collaborative methodology. It ensures that all four quadrants of criteria and the contingency guidelines are considered before the contract is granted.

- There is a tension between having a finer evaluation before the contract is awarded and having it after. On one hand, a better evaluation at the beginning may save us from making the wrong architectural decision and paying a tremendous amount of money later to fix or change the solution. On the other hand, we may want to do only what is absolutely necessary at the beginning. Things may change during the implementation, and doing too much at the beginning may introduce risks, hinder future decisions and be redundant.

- We may be able to put the evaluation activities in an “onion model”. In the core of the onion we have basic analytical evaluation and the definition of the evaluation and its criteria. The next layer includes verification, credibility and acceptability; the outermost layer includes infrastructure, impact, pilot, models, iteration, collaboration, fuzziness and multi-facet-ness. The further out the layer, the better the quality and the higher the cost of the evaluation techniques. Enterprises can choose the sophistication of the evaluation process based on their needs and available budget. Different layers of evaluation can also be placed in different phases of the project, some before the bid is awarded and some after.

Conclusion

Contract bid and technology proposal evaluation affects the business decision of a project, i.e. whether to open a project. Choosing the right methodologies to make a correct decision is important to the buyer. Hitting the important points and addressing the needs with the right solution design at a reasonable cost is important to the seller. Bear in mind that a technology proposal evaluation is not purely technical. Technical evaluation is only a subset of the whole evaluation, which is driven by financial and organizational political factors. Even so, neglecting thorough technical analysis and technical merits may end up costing you more and making your business look very bad in the end.

References

[1] Project Management Institute. (2008). A Guide to the Project Management Body of Knowledge (PMBOK Guide) – Fourth Edition.

[2] Len Bass, Paul Clements, Rick Kazman, Linda Northrop, Amy Zaremski. (1997). Recommended Best Industrial Practice for Software Architecture Evaluation.

[3] William E. Moen, Kathleen Murray, Irene Lopatovska. (2003). Technical evaluation methodology for RFP demonstration systems and RFP written responses. University of North Texas.

[4] Maria Jean J. Hall, Richard Hall, John Zeleznikow. (2003). A process for evaluating legal knowledge-based systems based upon the Context Criteria Contingency-guidelines Framework.

[5] Greta Kelly. (2008). Collaborative process for evaluating new educational technologies.

[6] Cengiz Kahraman, Nüfer Yasin Ateş, Sezi Çevik, Murat Gülbay and S. Ayça Erdoğan. (2003). Hierarchical fuzzy TOPSIS model for selection among logistics information technologies.

[7] Amir M. Sharif. (2008). Paralysis by analysis? The dilemma of choice and the risks of technology evaluation.

Author

Eric Tse is an internationally recognized expert/consultant in Enterprise Access and Identity Management Architecture Design and Implementation. He has worked with internationally renowned experts in information technology at many prestigious companies. He also pursues research interests in project management, financial models, application/enterprise/solution architectures, compilation technology and philosophy of science.

Copyright Project Perfect